Sentiment Analysis of a Crude Approach

A short write-up for quick and dirty sentiment classification and models.

Pre-requisite

- Python

- Data with Labels

Basic idea about what is being done:

- Make word vectors out of corpus text.

- Use word vectors to form sentence/document vectors.

- Apply ML classifiers to the word vectors to build a model.

- Predict.

I will be making use of the data set found on Kaggle. You can download it here. Since this is a quick and dirty approach, we will omit the text preprocessing part, such as stop words removal, etc. But just a reminder.

In Natural Language Processing (NLP) 90% of the work is preprocessing — Some Researcher

Moving on…

Let’s start by reading the data from the CSV/TSV file.

# Read csv file using pandas

train = pd.read_csv("data/train.csv")# Create an empty list to store the tokens

corpus = []for p in train.Phrase:

corpus.append(p.split())

Once the data is read, we will store the data in a list (corpus). The next step would be to create word vectors. We will be using the gensim package for this purpose. Have a look at the below code

# Create a word vector model with vectors of dimensions 25

model = Word2Vec(corpus, min_count=1, size=25)# Save the model in a file

model.save("model/model")

The vector space model will give us vectors that would look something like this:

# Output showing vector for word 'escapades'

In [35]: model["escapades"]Out[35]:array([ 0.01912756, 0.11313001, 0.05706277, 0.05470243, -0.07171227,-0.00395091, 0.01398386, 0.01066697, 0.01835706, 0.16320878,-0.09950776, 0.02733932, 0.0118545 , -0.00124337, 0.02434457,-0.11922658, -0.00507172, -0.12057459, -0.00341248, -0.01090243,-0.00488957, 0.0275436 , -0.0614472 , 0.05964575, -0.00052632], dtype=float32)

The vector is nothing but a mathematical representation of the occurrence of the word ‘escapades’ in the corpus.

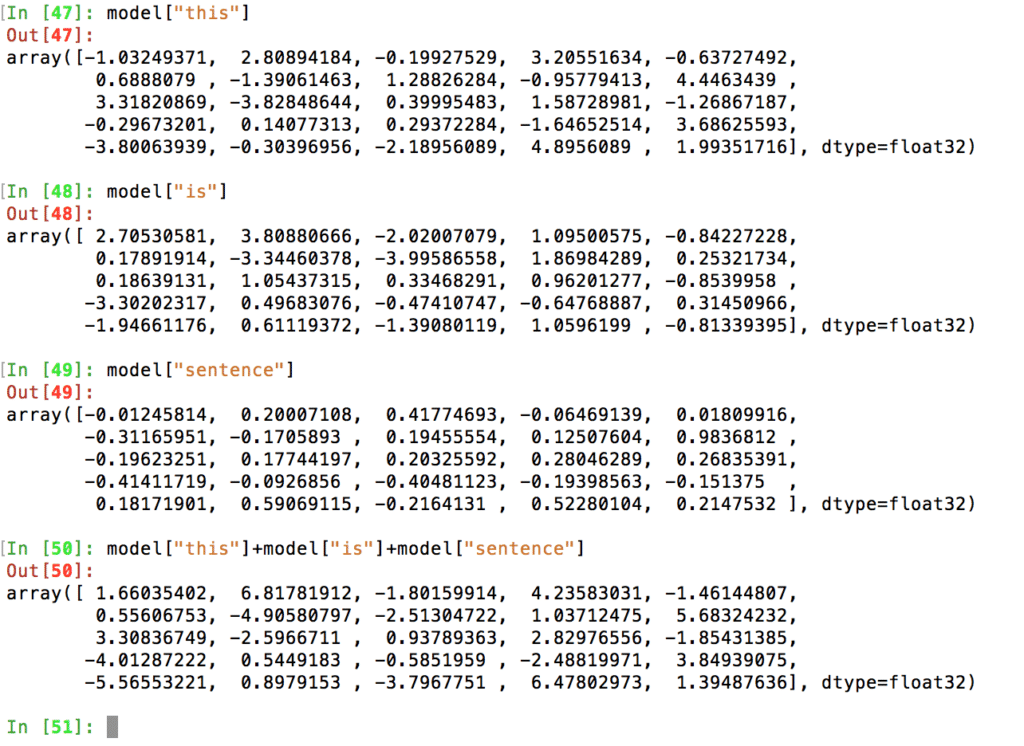

The best part about having word vectors is we can play with them, simply by adding or subtracting or performing various algebraic operation between multiple vectors.

The next step is to build document vectors. There can be many ways to do this. I will simply be adding the vectors for a document vector.

For e.g., “This is the sentence,” we will simply add the individual vectors for each word. As seen below

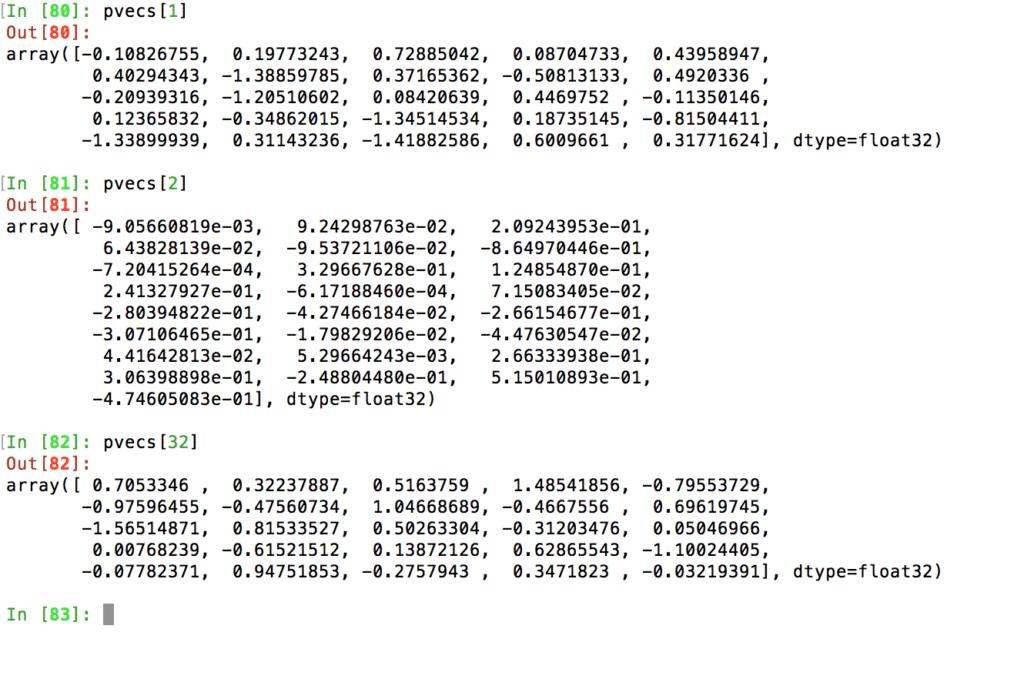

Now store these in some form of a dictionary.

pvecs = dict()for r in train.iterrows():

sid = r[1]["SentenceId"] phvec = sum([model[x] for x in r[1]["Phrase"].split()])

pvecs[sid] = phvec

The above code does the job of calculating the sentence/phrase vectors and stores those in a dictionary called pvecs.

After we have pvecs, let’s create a data frame that is more intuitive to a data scientist with features and label columns. The below code does the job of creating the PVDF data frame.

# Convert the dictionary into a dataframe

pvdf = pd.DataFrame.from_dict(pvecs, orient='index')# Rename columns

pvdf.columns = ["feat_"+str(x) for x in range(1,26)]# Add sentiment lable to pvdf

pvdf["label"] = train.Sentiment

The data frame will look something like this.

Now we can use our favorite python package, ‘sci-kit learn,’ to build a classification model. Let’s do that…

# Define and train a classifier

clf = svm.SVC()

clf.fit(pvdf[pvdf.columns[:25]], pvdf.label)

That feels good, ain’t it? Excellent, our classifier is trained. Now time to test it. Let’s get our test dataset out.

Before we do that, there is one thing we missed we had our labels as 0,1,2,3.. but we never discussed what they meant here,

0 — negative

1 — somewhat negative

2 — neutral

3 — somewhat positive

4 — Positive

Time to test, the below code takes a test phrase and classifies its sentiment.

# test.Phrase[0]# 'An intermittently pleasing but mostly routine effort .'# Lets create a phrase vector

y = pd.DataFrame(sum([model[x] for x in test.Phrase[0].split()])).transpose()# Make the final prediction

clf.predict(y)# outputs 2 => neutral

You can go ahead and try out a few more examples. The code can be found on Github here.

For any questions and inquiries, visit us at thinkitive

Right here is the perfect web site for anyone who hopes to find out about this topic. You understand a whole lot its almost hard to argue with you (not that I actually would want toÖHaHa). You definitely put a new spin on a topic that has been discussed for decades. Excellent stuff, just great!

I was excited to discover this website. I want to to thank you for your time for this wonderful read!! I definitely liked every part of it and i also have you book-marked to look at new things on your website.

Good post. I learn something totally new and challenging on blogs I stumbleupon every day. It will always be interesting to read through articles from other writers and use a little something from other sites.